openQA starter guide

1. Introduction

openQA is an automated test tool that makes it possible to test the whole installation process of an operating system. It uses virtual machines to reproduce the process, check the output (both serial console and screen) in every step and send the necessary keystrokes and commands to proceed to the next. openQA can check whether the system can be installed, whether it works properly in 'live' mode, whether applications work or whether the system responds as expected to different installation options and commands.

Even more importantly, openQA can run several combinations of tests for every revision of the operating system, reporting the errors detected for each combination of hardware configuration, installation options and variant of the operating system.

openQA is free software released under the GPLv2 license. The source code and documentation are hosted in the os-autoinst organization on GitHub.

This document describes the general operation and usage of openQA. The main goal is to provide a general overview of the tool, with all the information needed to become a happy user.

For a quick start, if you already have an openQA instance available you can refer to the section Cloning existing jobs - openqa-clone-job directly to trigger a new test based on already existing job. For a quick installation refer directly to Quick bootstrapping under openSUSE or Container based setup.

For the installation of openQA in general see the Installation Guide, as a user of an existing instance see the Users Guide. More advanced topics can be found in other documents. All documents are also available in the official repository.

2. Architecture

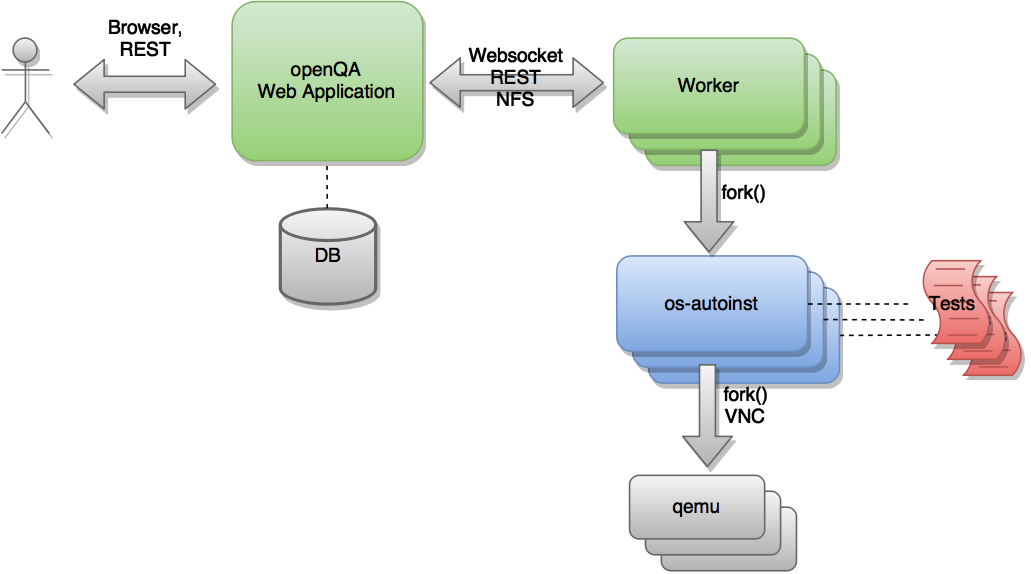

Although the project as a whole is referred to as openQA, there are in fact several components that are hosted in separate repositories as shown in the following figure.

The heart of the test engine is a standalone application called 'os-autoinst' (blue). In each execution, this application creates a virtual machine and uses it to run a set of test scripts (red). 'os-autoinst' generates a video, screenshots and a JSON file with detailed results.

'openQA' (green) on the other hand provides a web based user interface and infrastructure to run 'os-autoinst' in a distributed way. The web interface also provides a JSON based REST-like API for external scripting and for use by the worker program. Workers fetch data and input files from openQA for os-autoinst to run the tests. A host system can run several workers. The openQA web application takes care of distributing test jobs among workers. Web application and workers can run on the same machine as well as connected via network on multiple machines within the same network or distributed. Running the web application as well as the workers in the cloud is perfectly possible.

Note that the diagram shown above is simplified. There exists a more sophisticated version which is more complete and detailed. (The diagram can be edited via its underlying GraphML file.)

3. Basic concepts

3.1. Glossary

| test modules |

an individual test case in a single perl module file, e.g. "sshxterm". If not further specified a test module is denoted with its "short name" equivalent to the filename including the test definition. The "full name" is composed of the test group (TBC), which itself is formed by the top-folder of the test module file, and the short name, e.g. "x11-sshxterm" (for x11/sshxterm.pm) |

| test suite |

a collection of test modules, e.g. "textmode". All test modules within one test suite are run serially |

| job |

one run of individual test cases in a row denoted by a unique number for one instance of openQA, e.g. one installation with subsequent testing of applications within gnome |

| test run |

equivalent to job |

| test result |

the result of one job, e.g. "passed" with the details of each individual test module |

| test step |

the execution of one test module within a job |

| distri |

a test distribution but also sometimes referring to a product (CAUTION: ambiguous, historically a "GNU/Linux distribution"), composed of multiple test modules in a folder structure that compose test suites, e.g. "opensuse" (test distribution, short for "os-autoinst-distri-opensuse") |

| needles |

reference images to assert whether what is on the screen matches expectations and to locate elements on the screen the tests needs to interact with (e.g. to locate a button to click on it) |

| product |

the main "system under test" (SUT), e.g. "openSUSE", also called "Medium Types" in the web interface of openQA |

| job group |

equivalent to product, used in context of the webUI |

| version |

one version of a product, don’t confuse with builds, e.g. "Tumbleweed" |

| flavor |

a specific variant of a product to distinguish differing variants, e.g. "DVD" |

| arch |

an architecture variant of a product, e.g. "x86_64" |

| machine |

additional variant of machine, e.g. used for "64bit", "uefi", etc. |

| scenario |

A composition of

|

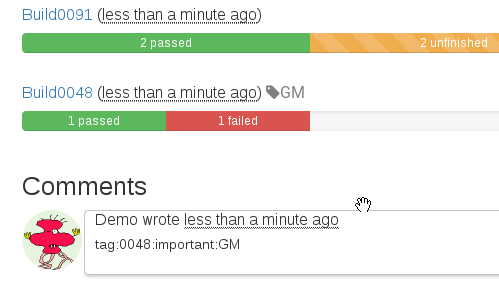

| build |

Different versions of a product as tested, can be considered a "sub-version" of version, e.g. "Build1234"; CAUTION: ambiguity: either with the prefix "Build" included or not |

3.2. Jobs

One of the most important features of openQA is that it can be used to test several combinations of actions and configurations. For every one of those combinations, the system creates a virtual machine, performs certain steps and returns an overall result. Every one of those executions is called a 'job'. Every job is labeled with a numeric identifier and has several associated 'settings' that will drive its behavior.

A job goes through several states. Here is (an incomplete list) of these states:

-

scheduled Initial state for newly created jobs. Queued for future execution.

-

setup/running/uploading In progress.

-

cancelled The job was explicitly cancelled by the user or was replaced by a clone (see below) and the worker has not acknowledged the cancellation yet.

-

done The worker acknowledged that the execution finished or the web UI considers the job as abandoned by the worker.

Jobs in the final states 'cancelled' and 'done' have typically gone through a whole sequence of steps (called 'testmodules') each one with its own result. But in addition to those partial results, a finished job also provides an overall result from the following list.

-

none For jobs that have not reached one of the final states.

-

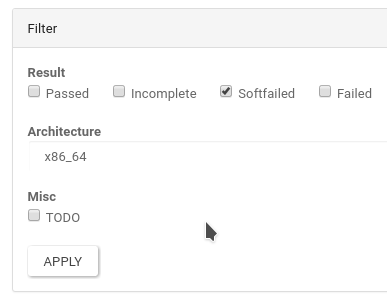

passed No critical check failed during the process. It does not necessarily mean that all testmodules were successful or that no single assertion failed.

-

failed At least one assertion considered to be critical was not satisfied at some point.

-

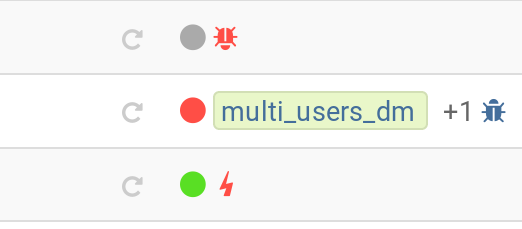

softfailed At least one known, non-critical issue has been found. That could be that workaround needles are in place, a softfailure has been recorded explicitly via

record_soft_failure(from os-autoinst) or a job failure has been ignored explicitly via a job label. -

timeout_exceeded The job was aborted because

MAX_JOB_TIMEorMAX_SETUP_TIMEhas been exceeded, see Changing timeout for details. -

skipped Dependencies failed so the job was not started.

-

obsoleted The job was superseded by scheduling a new product.

-

parallel_failed/parallel_restarted The job could not continue because a job which is supposed to run in parallel failed or was restarted.

-

user_cancelled/user_restarted The job was cancelled/restarted by the user.

-

incomplete The test execution failed due to an unexpected error, e.g. the network connection to the worker was lost.

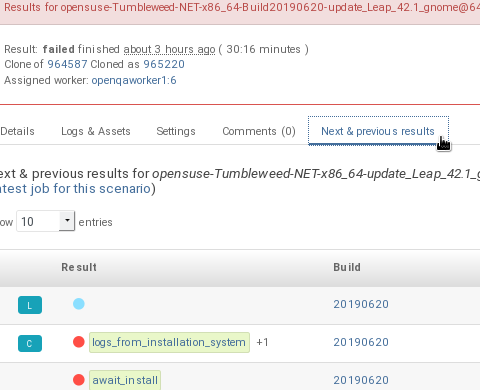

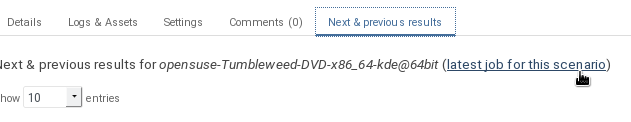

Sometimes, the reason of a failure is not an error in the tested operating system itself, but an outdated test or a problem in the execution of the job for some external reason. In those situations, it makes sense to re-run a given job from the beginning once the problem is fixed or the tests have been updated. This is done by means of 'cloning'. Every job can be superseded by a clone which is scheduled to run with exactly the same settings as the original job. If the original job is still not in 'done' state, it’s cancelled immediately. From that point in time, the clone becomes the current version and the original job is considered outdated (and can be filtered in the listing) but its information and results (if any) are kept for future reference.

3.3. Needles

One of the main mechanisms for openQA to know the state of the virtual machine is checking the presence of some elements in the machine’s 'screen'. This is performed using fuzzy image matching between the screen and the so called 'needles'. A needle specifies both the elements to search for and a list of tags used to decide which needles should be used at any moment.

A needle consists of a full screenshot in PNG format and a json file with the same name (e.g. foo.png and foo.json) containing the associated data, like which areas inside the full screenshot are relevant or the mentioned list of tags.

{

"area" : [

{

"xpos" : INTEGER,

"ypos" : INTEGER,

"width" : INTEGER,

"height" : INTEGER,

"type" : ( "match" | "ocr" | "exclude" ),

"match" : INTEGER, // 0-100. similarity percentage

"click_point" : CLICK_POINT, // Optional click point

},

...

],

"tags" : [

STRING, ...

]

}3.3.1. Areas

There are three kinds of areas:

-

Regular areas define relevant parts of the screenshot. Those must match with at least the specified similarity percentage. Regular areas are displayed as green boxes in the needle editor and as green or red frames in the needle view (green for matching areas, red for non-matching ones).

-

OCR areas also define relevant parts of the screenshot. However, an OCR algorithm is used for matching. In the needle editor OCR areas are displayed as orange boxes. To turn a regular area into an OCR area within the needle editor, double click the concerning area twice. Note that such needles are only rarely used.

-

Exclude areas can be used to ignore parts of the reference picture. In the needle editor exclude areas are displayed as red boxes. To turn a regular area into an exclude area within the needle editor, double click the concerning area. In the needle view exclude areas are displayed as gray boxes.

3.3.2. Click points

Each regular match area in a needle can optionally contain a click point.

This is used with the testapi::assert_and_click function to match GUI

elements such as buttons and then click inside the matched area.

{

"xpos" : INTEGER, // Relative coordinates inside the match area

"ypos" : INTEGER,

"id" : STRING, // Optional

}Each click point can have an id, and if a needle contains multiple click points

you must pass it to testapi::assert_and_click to select which click point

to use.

3.4. Configuration

The different components of openQA read their configuration from the following files:

-

openqa.ini,openqa.ini.d/*.ini: These files are the "web UI" config. Services providing the web interface and related services such as the scheduler are configured via these files. -

database.ini,database.ini.d/*.ini: These files are also used by services providing the web interface and related services such as the scheduler. It is used to configure how those services connect to the database. -

workers.ini,workers.ini.d/*.ini: These files are used to configure the openQA worker including its additional cache service. -

client.conf,client.conf.d/*.conf: These files contain API credentials and are used by the openQA worker and other tooling such asopenqa-cliandopenqa-clone-jobto authenticate with the web interface. One API key/secret can be configured per web UI host.

If these files are not present, defaults are used.

Example configuration files are installed under /usr/share/doc/openqa/examples

and in the

Git repository under etc/openqa.

Continue reading the next sections for where you can place the actual

configuration files and the possibility of creating drop-in configuration files.

3.4.1. Locations

All configuration files can be placed under /etc/openqa,

e.g. /etc/openqa/openqa.ini. That is where administrators are expected to

store system-wide configuration.

Configuration files are also looked up under /usr/etc/openqa where a

package maintainer

can place default values deviating from upstream defaults.

The client configuration can also be put under ~/.config/openqa/client.conf.

For development, checkout the section about customizing the configuration directory.

3.4.2. Drop-in configurations

It is recommended to split the configuration into multiple files and store these

"drop-in" configuration files in the ….d sub directory, e.g.

/etc/openqa/openqa.ini.d/01-plugins.ini. This is possible for all config

files, e.g. /etc/openqa/workers.ini.d/01-bare-metal-instances.ini and

/etc/openqa/client.conf.d/01-internal.conf can be created as well to configure

workers and API credentials. This works also on other locations, e.g.

/usr/etc/openqa/openqa.ini.d/01-plugins.ini will be found as well. Settings

from drop-in configurations override settings from the main config files.

Drop-in configurations are read in alphabetical order and subsequent files

override settings from preceding ones.

3.5. Access management

Some actions in openQA require special privileges. openQA provides authentication through openID. By default, openQA is configured to use the openSUSE openID provider, but it can very easily be configured to use any other valid provider. Every time a new user logs into an instance, a new user profile is created. That profile only contains the openID identity and two flags used for access control:

-

operator Means that the user is able to manage jobs, performing actions like creating new jobs, cancelling them, etc.

-

admin Means that the user is able to manage users (granting or revoking operator and admin rights) as well as job templates and other related information (see the the corresponding section).

Many of the operations in an openQA instance are not performed through the web interface but using the REST-like API. The most obvious examples are the workers and the scripts that fetch new versions of the operating system and schedule the corresponding tests. Those clients must be authorized by an operator using an API key with an associated shared secret.

For that purpose, users with the operator flag have access in the web interface to a page that allows them to manage as many API keys as they may need. For every key, a secret is automatically generated. The user can then configure the workers or any other client application to use whatever pair of API key and secret owned by him. Any client to the REST-like API using one of those API keys will be considered to be acting on behalf of the associated user. So the API key not only has to be correct and valid (not expired), it also has to belong to a user with operator rights.

For more insights about authentication, authorization and the technical details of the openQA security model, refer to the detailed blog post about the subject by the openQA development team.

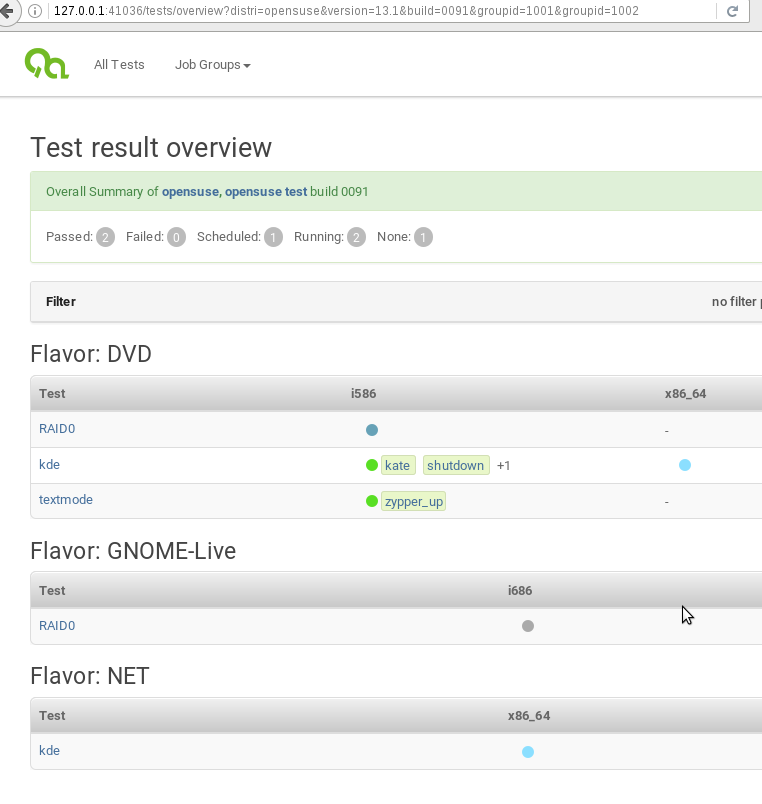

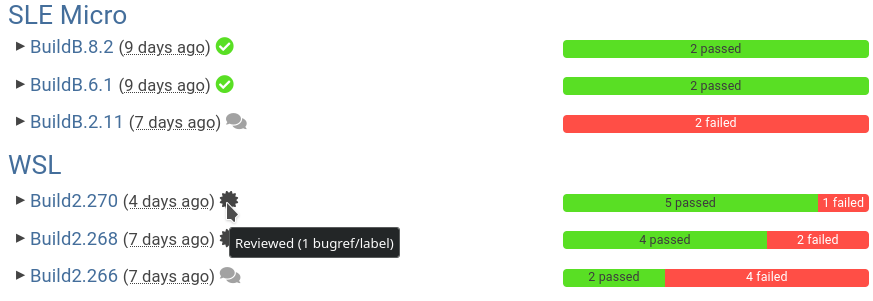

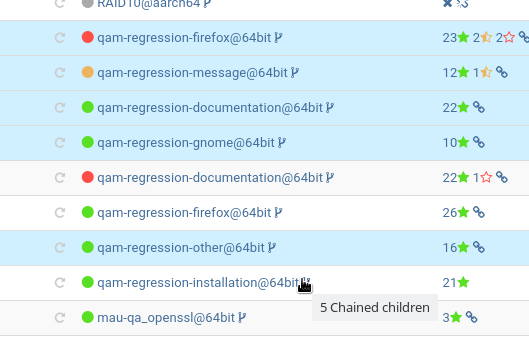

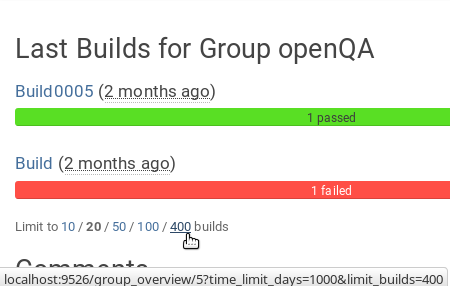

3.6. Job groups

A job can belong to a job group. Those job groups are displayed on the index

page when there are recent test results in these job groups and in the Job

Groups menu on the navigation bar. From there the job group overview pages

can be accessed. Besides the test results the job group overview pages provide

a description about the job group and allow commenting.

Job groups have properties. These properties are mostly cleanup related. The configuration can be done in the operators menu for job groups.

It is also possible to put job groups into categories. The nested groups will then inherit properties from the category. The categories are meant to combine job groups with common builds so test results for the same build can be shown together on the index page.

3.7. Cleanup

|

Important

|

openQA automatically deletes data that it considers "old" based on different settings. For example old jobs and assets are deleted at some point. |

The following cleanup settings can be done on job-group-level:

| size limit |

Limits size of assets |

| keep logs for |

Specifies how long logs of a non-important job are retained after it finished |

| keep important logs for |

How long logs of an important job are retained after it finished |

| keep results for |

specifies How long results of a non-important job are retained after it finished |

| keep important results for |

How long results of an important job are retained after it finished |

NOTE Deletion of job results includes deletion of logs and will cause the job to be completely removed from the database.

NOTE Checkout the Cleanup section for more details and the Build tagging section for how to mark a job as important.

NOTE New groups use the limits configured in the [default_group_limits] section

of the web UI configuration. Jobs outside

of any group use the limits configured in the [no_group_limits] section of the

web UI configuration.

NOTE Archiving of important jobs can be enabled. Checkout the related settings

within the [archiving] section of the config file for details.

4. Using the client script

Just as the worker uses an API key+secret every user of the client script

must do the same. The same API key+secret as previously created can be used or

a new one created over the webUI.

The personal configuration should be stored in a file

~/.config/openqa/client.conf in the same format as previously described for

the client.conf, i.e. sections for each machine, e.g. localhost.

5. Testing openSUSE or Fedora

An easy way to start using openQA is to start testing openSUSE or Fedora as they have everything setup and prepared to ease the initial deployment. If you want to play deeper, you can configure the whole openQA manually from scratch, but this document should help you to get started faster.

5.1. Getting tests

You can point CASEDIR and NEEDLES_DIR to Git repositories. openQA will

checkout those repositories automatically and no manual setup is needed.

Otherwise you will need to clone tests and needles manually. For this,

clone a subdirectory under /var/lib/openqa/tests for each test distribution

you need, e.g. /var/lib/openqa/tests/opensuse for openSUSE tests.

The repositories will be kept up-to-date if git_auto_update is enabled in

the web UI configuration (which is the

default). The updating is triggered when new tests are scheduled. For a periodic

update (to avoid getting too far behind) you can enable the systemd unit

openqa-enqueue-git-auto-update.timer.

You can get openSUSE tests and needles from

GitHub. To make it

easier, you can just run /usr/share/openqa/script/fetchneedles. It will

download tests and needles to the correct location with the correct permissions.

Fedora’s tests are also in git. To use them, you may do:

cd /var/lib/openqa/share/tests

mkdir fedora

cd fedora

git clone https://pagure.io/fedora-qa/os-autoinst-distri-fedora.git

cd ..

chown -R geekotest fedora/5.2. Getting openQA configuration

To get everything configured to actually run the tests, there are plenty of options to set in the admin interface. If you plan to test openSUSE Factory, using tests mentioned in the previous section, the easiest way to get started is the following command:

/var/lib/openqa/share/tests/opensuse/products/opensuse/templates [--apikey API_KEY] [--apisecret API_SECRET]This will load some default settings that were used at some point of time in

openSUSE production openQA. Therefore those should work reasonably well with

openSUSE tests and needles. This script uses /usr/share/openqa/script/openqa-load-templates,

consider reading its help page (--help) for documentation on possible extra arguments.

For Fedora, similarly, you can call:

/var/lib/openqa/share/tests/fedora/fifloader.py -c -l templates.fif.json templates-updates.fif.jsonFor this to work you need to have a client.conf with API key and secret, because

fifloader doesn’t support setting them on the command line. See the

openQA client section for more details on this. Also

see the docstring of fifloader.py for details on the alternative template format

Fedora uses.

Some Fedora tests require special hard disk images to be present in

/var/lib/openqa/share/factory/hdd/fixed. The createhdds.py script in the

createhdds

repository can be used to create these. See the documentation in that repo

for more information.

5.3. Adding a new ISO to test

To start testing a new ISO put it in /var/lib/openqa/share/factory/iso and call

the following commands:

# Run the first test

openqa-cli api -X POST isos \

ISO=openSUSE-Factory-NET-x86_64-Build0053-Media.iso \

DISTRI=opensuse \

VERSION=Factory \

FLAVOR=NET \

ARCH=x86_64 \

BUILD=0053If your openQA is not running on port 80 on 'localhost', you can add option

--host=http://otherhost:9526 to specify a different port or host.

|

Warning

|

Use only the ISO filename in the 'client' command. You must place the

file in /var/lib/openqa/share/factory/iso. You cannot place the file elsewhere and

specify its path in the command. However, openQA also supports a

remote-download feature of assets from trusted domains.

|

For Fedora, a sample run might be:

# Run the first test

openqa-cli api -X POST isos \

ISO=Fedora-Everything-boot-x86_64-Rawhide-20160308.n.0.iso \

DISTRI=fedora \

VERSION=Rawhide \

FLAVOR=Everything-boot-iso \

ARCH=x86_64 \

BUILD=Rawhide-20160308.n.0More details on triggering tests can also be found in the Users Guide.

6. Pitfalls

Take a look at Documented Pitfalls.

openQA installation guide

7. Introduction

openQA is an automated test tool that makes it possible to test the whole installation process of an operating system. It is free software released under the GPLv2 license. The source code and documentation are hosted in the os-autoinst organization on GitHub.

This document provides the information needed to install and setup the tool, as well as information useful for everyday administration of the system. It is assumed that the reader is already familiar with the concepts of openQA and has already read the Getting Started Guide, also available at the official repository.

Continue with the next section Container based setup to setup a simple, ready-to-use container based openQA instance which is useful for a single user setup. For a quick bootstrapping under openSUSE go to Quick bootstrapping. Else, continue with the more advanced section about Custom installation. For a setup suitable to develop openQA itself, have a look at the Development setup section.

8. Container based setup

openQA is provided in containers. Multiple variants exist.

8.1. Single-instance container

The easiest and quickest way to spawn a single instance of openQA with a

single command line using the podman container engine is the following:

podman run --name openqa --device /dev/kvm -p 1080:80 -p 1443:443 --rm -it \

registry.opensuse.org/devel/openqa/containers/openqa-single-instanceOnce the startup has finished, the web UI is accessible via http://localhost:1080 or https://localhost:1443.

8.1.1. How to run a test with single-instance container in 5 minutes

8.1.2. Triggering and cloning existing jobs within single-instance container

For triggering new tests or cloning existing ones you can use openqa-cli

which is conveniently available inside the container. The quickest and easiest

way would be to enter an interactive session inside the already running

single-instance container. You can spawn a new shell via:

podman exec -ti openqa /bin/bashFrom there, you can trigger a new job or clone an existing one, e.g.:

openqa-cli schedule --monitor \

--param-file SCENARIO_DEFINITIONS_YAML=scenario-definitions.yaml \

DISTRI=example VERSION=0 FLAVOR=DVD ARCH=x86_64 \

TEST=simple_boot _GROUP_ID=0 BUILD=test \

CASEDIR=https://github.com/os-autoinst/os-autoinst-distri-example.git \

NEEDLES_DIR=%%CASEDIR%%/needles

openqa-clone-job https://openqa.opensuse.org/tests/1More details on triggering tests can also be found in the Users Guide.

You can also run the container and directly clone tests, e.g.:

podman run -e skip_suse_specifics= -e skip_suse_tests= \

--name openqa --device /dev/kvm -p 1080:80 -p 1443:443 --rm \

-it registry.opensuse.org/devel/openqa/containers/openqa-single-instance \

https://openqa.opensuse.org/tests/1The skip_suse_specifics= and skip_suse_tests= options ensure the test files

and needles required for openSUSE/SUSE tests are downloaded. Refer to the

example video above

and the get testing section for more

details.

8.2. Separate web UI and worker containers

As an alternative also separate containers are provided for both the web UI and worker.

For example the web UI container can be pulled and started using the podman

container engine:

podman run -p 1080:80 -p 1443:443 --rm -it registry.opensuse.org/devel/openqa/containers15.6/openqa_webui:latestThe worker container can be pulled and started with:

podman run --rm -it registry.opensuse.org/devel/openqa/containers15.6/openqa_worker:latest8.3. Custom configuration for containers

To supply a custom openQA config file, use the -v parameter. This also works

for the database config file. Note that if a custom database config file is

specified, no database is launched within the container.

By default, the web UI container uses the self-signed certificate that comes

with Mojolicious. To supply a different certificate, use the -v parameter.

Example for running openQA with a custom config and certificate:

podman run -p 1080:80 -p 1443:443 \

-v ./container/webui/test-cert.pem:/etc/apache2/ssl.crt/server.crt:z \

-v ./container/webui/test-key.pem:/etc/apache2/ssl.key/server.key:z \

-v ./container/webui/test-cert.pem:/etc/apache2/ssl.crt/ca.crt:z \

-v ./container/webui/conf/openqa.ini:/data/conf/openqa.ini:z \

--rm -it registry.opensuse.org/devel/openqa/containers15.6/openqa_webui:latestThe same works for the workers container where you most likely want to to

supply workers.ini and client.conf:

podman run \

-v ./container/worker/conf/workers.ini:/data/conf/workers.ini:z \

-v ./container/worker/conf/client.conf:/data/conf/client.conf:z \

--rm -it registry.opensuse.org/devel/openqa/containers15.6/openqa_worker:latestThis examples assume the working directory is an openQA checkout. To avoid doing a checkout, you can also grab the config files from the webui/conf and worker/conf directory listings on GitHub.

To learn more about how to run workers checkout the Run openQA workers section.

For creating a first test job, checkout the Triggering tests section. Note that the commands mentioned there can also be invoked within a container, e.g.:

podman run \

--rm -it registry.opensuse.org/devel/openqa/containers15.6/openqa_webui:latest \

openqa-cli --helpCheckout the containerized setup section for more details.

Take a look at openSUSE’s registry for all available container images.

8.4. Kubernetes

Find a guide and the helm charts for Kubernetes deployment of openQA in Helm README.

9. Quick bootstrapping under openSUSE

To quickly get a working openQA installation, you can use the openqa-bootstrap script. It essentially automates the steps mentioned in the Custom installation section.

9.1. Directly on your machine

On openSUSE Leap and openSUSE Tumbleweed to setup openQA on your machine simply download and execute the openqa-bootstrap script as root - it will do the rest for you:

curl -s https://raw.githubusercontent.com/os-autoinst/openQA/master/script/openqa-bootstrap | bash -xThe script is also available from an openSUSE package to install from:

zypper in openQA-bootstrap

/usr/share/openqa/script/openqa-bootstrapopenQA-bootstrap supports to immediately clone an existing job simply by

supplying openqa-clone-job parameters directly for a quickstart:

/usr/share/openqa/script/openqa-bootstrap --from openqa.opensuse.org 12345 SCHEDULE=tests/boot/boot_to_desktop,tests/x11/kontactThe above command will bootstrap an openQA installation and immediately

afterwards start a local test job clone from a test job from a remote instance

with optional, overridden parameters. More information about

openqa-clone-job can be found in

Cloning existing jobs - openqa-clone-job.

You can also run openqa-bootstrap repeatedly. For example when you stop a

container and the openQA daemons and database are stopped, calling

openqa-bootstrap start will start necessary daemons again.

9.2. openQA in a browser

You can try out openqa-bootstrap in a container environment like

GitHub Codespaces.

On GitHub openQA, click on the "Code" button and select "Codespaces". Just click on the plus sign to create a new Codespace. Or use this link as a quickstart to resume existing instances or create new ones.

It will run openqa-bootstrap in the background. If the codespace

environment is ready, open a new VSCode terminal and type

tail -f /var/log/openqa-bootstrap.log

The Web UI instance can be opened as soon as you get a popup that there is a webserver available on port 80.

You can now use openqa-clone-job to run jobs in this instance.

After stopping and resuming a codespace instance, run

/usr/share/openqa/script/openqa-bootstrap start

to start the openQA daemons again.

Be sure to delete codespace instances if you don’t use them anymore, as even stopped instances will consume storage of your monthly limit.

9.3. openQA in a container

You can also setup a systemd-nspawn container with openQA with the following commands. and you need to have no application listening on port 80 yet because the container will share the host system’s network stack.

zypper in openQA-bootstrap

/usr/share/openqa/script/openqa-bootstrap-container

systemd-run -tM openqa1 /bin/bash # start a shell in the container10. Custom installation - repositories and procedure

Keep in mind that there can be disruptive changes between openQA versions. You need to be sure that the webui and the worker that you are using have the same version number or, at least, are compatible.

For example, the packages distributed with older versions of openSUSE Leap are not compatible with the version on Tumbleweed. And the package distributed with Tumbleweed may not be compatible with the version in the development package.

10.1. Official repositories

The easiest way to install openQA is from distribution packages.

-

For SUSE Linux Enterprise (SLE), openSUSE Leap and Tumbleweed packages are available.

-

For Fedora, packages are available in the official repositories for Fedora 23 and later.

10.2. Development version repository

You can find the development version of openQA in OBS in the openQA:devel repository.

To add the development repository to your system, you can use these commands.

# openSUSE Tumbleweed

zypper ar -p 95 -f 'http://download.opensuse.org/repositories/devel:openQA/openSUSE_Tumbleweed' devel_openQA

# openSUSE Leap/SLE

zypper ar -p 95 -f 'http://download.opensuse.org/repositories/devel:openQA/$releasever' devel_openQA

zypper ar -p 90 -f 'http://download.opensuse.org/repositories/devel:openQA:Leap:$releasever/$releasever' devel_openQA_Leap|

Note

|

If you installed openQA from the official repository first, you may need to change the vendor of the dependencies. |

# openSUSE Tumbleweed and Leap

zypper dup --from devel_openQA --allow-vendor-change

# openSUSE Leap

zypper dup --from devel_openQA_Leap --allow-vendor-change10.3. Installation

10.3.1. Preparations on SLE

On SLE certain modules have to be enabled. Afterwards the instructions for openSUSE apply.

. /etc/os-release

SUSEConnect -p sle-module-desktop-applications/$VERSION_ID/$CPU

SUSEConnect -p sle-module-development-tools/$VERSION_ID/$CPU

SUSEConnect -p sle-we/$VERSION_ID/$CPU -r $sled_key

SUSEConnect -p PackageHub/$VERSION_ID/$CPU10.3.2. Installing openQA

You can install the main openQA server package using these commands.

# openSUSE

zypper in openQA

# Fedora

dnf install openqa openqa-httpdTo install the openQA worker package use the following.

# SLE/openSUSE

zypper in openQA-workerDifferent convenience packages exist for convenience in openSUSE, for example:

openQA-local-db to install the server including the setup of a local

PostgreSQL database or openQA-single-instance which sets up a web UI server,

a web proxy as well as a local worker. Install openQA-client if you only

want to interact with existing, external openQA instances.

10.3.3. Installation from sources

Installing is not required for development purposes and most components of openQA can be called directly from the repository checkout.

To install openQA from sources make sure to install all dependencies as explained in Dependencies. Then one can call

make installThe directory prefix can be controlled with the optional environment variable

DESTDIR.

From then on continue with the Basic configuration.

11. System requirements

To run tests based on the default qemu backend the following hardware specifications are recommended per openQA worker instance:

-

1x CPU core with 2x hyperthreads (or 2x CPU cores)

-

8GB RAM

-

40GB HDD (preferably SSD or NVMe)

12. Basic configuration

For a local instance setup you can simply execute the script:

/usr/share/openqa/script/configure-web-proxyThis will automatically setup a local Apache http proxy. The script

also supports NGINX and a custom port to listen on. Try --help to

learn about the available options. Read on for more detailed setup

instructions with all the details.

|

Note

|

The web proxy might not be allowed to connect to openQA when SELinux is enabled.

Therefore the configure-web-proxy script will automatically run

semanage boolean -m -1 httpd_can_network_connect on SELinux systems to change that.

|

If you wish to run openQA behind an http proxy (Apache, NGINX, …) then see the

openqa.conf.template config file in /etc/apache2/vhosts.d (openSUSE) or

/etc/httpd/conf.d (Fedora) when using apache2 or the config files in

/etc/nginx/vhosts.d for NGINX.

12.1. Apache proxy

To make everything work correctly on openSUSE when using Apache, you need to enable the 'headers', 'proxy', 'proxy_http', 'proxy_wstunnel' and 'rewrite' modules using the command 'a2enmod'. This is not necessary on Fedora.

# openSUSE Only

# You can check what modules are enabled by using 'a2enmod -l'

a2enmod headers

a2enmod proxy

a2enmod proxy_http

a2enmod proxy_wstunnel

a2enmod rewriteFor a basic setup, you can copy openqa.conf.template to openqa.conf

and modify the ServerName setting if required.

This will direct all HTTP traffic to openQA.

cp /etc/apache2/vhosts.d/openqa.conf.template /etc/apache2/vhosts.d/openqa.conf12.2. NGINX proxy

For a basic setup, you can copy openqa.conf.template to openqa.conf

and modify the server_name setting if required.

This will direct all HTTP traffic to openQA.

cp /etc/nginx/vhosts.d/openqa.conf.template /etc/nginx/vhosts.d/openqa.confNote that the default config in openqa.conf.template is using the keyword

default_server in the listen statement. This will only change the behaviour

when accessing the server via its IP address. This means that the default vhost

for localhost in nginx.conf will take precedence when accessing the server

via localhost. You might want to disable it.

If you use the openqa-upstreams.inc which is included with the upstream sources and openQA packages, you may want to customize the size of the shared memory segment according to the formula: page_size * 8

For openQA you need to set httpsonly = 0 as described in the TLS/SSL section

below, if you do not setup NGINX for SSL.

12.3. TLS/SSL

By default openQA expects to be run with HTTPS. The openqa-ssl.conf.template

Apache config file is available as a base for creating the Apache config; you

can copy it to openqa-ssl.conf and uncomment any lines you like, then

ensure a key and certificate are installed to the appropriate location

(depending on distribution and whether you uncommented the lines for key and

cert location in the config file). On openSUSE, you should also add SSL to the

APACHE_SERVER_FLAGS so it looks like this in /etc/sysconfig/apache2:

APACHE_SERVER_FLAGS="SSL"If you don’t have a TLS/SSL certificate for your host you must turn HTTPS off. You can do that in the web UI configuration:

[openid]

httpsonly = 012.4. Database

openQA uses PostgreSQL as database. By default, a database with name openqa

and geekotest user as owner is used. An automatic setup of a freshly

installed PostgreSQL instance can be done using this script.

The database connection can be configured in

the database configuration file.

(normally the [production] section is relevant). More info about the dsn

value format can be found in the DBD::Pg documentation.

12.4.1. Example for connecting to local PostgreSQL database

[production]

dsn = dbi:Pg:dbname=openqa12.4.2. Example for connecting to remote PostgreSQL database

[production]

dsn = dbi:Pg:dbname=openqa;host=db.example.org

user = openqa

password = somepassword12.5. User authentication

openQA supports three different authentication methods: OpenID (default), OAuth2 and Fake (for development).

Use the auth section in

the web UI configuration to configure

the method:

[auth]

# method name is case sensitive!

method = OpenIDIndependently of method used, the first user that logs in (if there is no admin yet) will automatically get administrator rights!

Note that only one authentication method and only one OpenID/OAuth2 provider can be configured at a time. When changing the method/provider no users/permissions are lost. However, a new and distinct user (with default permissions) will be created when logging in via a different method/provider because there is no automatic mapping of identities across different methods/providers.

For authentication to work correctly the clocks on workers and the web UI need to be in sync. The best way to achieve that is to install a service that implements the time-sync target. Otherwise a "timestamp mismatch" may be reported when clocks are too far apart.

12.5.1. OpenID

By default openQA uses OpenID with opensuse.org as OpenID provider.

OpenID method has its own openid section in

the web UI configuration:

[auth]

# method name is case sensitive!

method = OpenID

[openid]

## base url for openid provider

provider = https://www.opensuse.org/openid/user/

## enforce redirect back to https

httpsonly = 1This method supports OpenID version up to 2.0.

12.5.2. OAuth2

An additional Mojolicious plugin is required to use this feature:

# openSUSE

zypper in 'perl(Mojolicious::Plugin::OAuth2)'Example for configuring OAuth2 with GitHub:

[auth]

# method name is case sensitive!

method = OAuth2

[oauth2]

provider = github

key = mykey

secret = mysecretIn order to use GitHub for authorization, an "OAuth App" needs to be

registered on GitHub. Use …/login

as callback URL. Afterwards the key and secret will be visible to the application

owner(s).

As shown in the comments of the default configuration file, it is also possible to use different providers.

12.5.3. Fake

For development purposes only! Fake authentication bypass any authentication and

automatically allow any login requests as 'Demo user' with administrator privileges

and without password. To ease worker testing, API key and secret is created (or updated)

with validity of one day during login.

You can then use following as /etc/openqa/client.conf:

[auth]

# method name is case sensitive!

method = Fake

[localhost]

key = 1234567890ABCDEF

secret = 1234567890ABCDEFIf you switch authentication method from Fake to any other, review your API keys! You may be vulnerable for up to a day until Fake API key expires.

13. Run the web UI

To start openQA and enable it to run on each boot call

systemctl enable --now postgresql

systemctl enable --now openqa-webui

systemctl enable --now openqa-scheduler

# to use Apache as reverse proxy under openSUSE

systemctl enable apache2

systemctl restart apache2

# to use Apache as reverse proxy under Fedora

# for now this is necessary to allow Apache to connect to openQA

setsebool -P httpd_can_network_connect 1

systemctl enable httpd

systemctl restart httpdThe openQA web UI should be available on http://localhost/ now. To simply

start openQA without enabling it permanently one can simply use systemctl

start instead.

13.1. Additional considerations for zero-downtime upgrades

The main openQA web UI service (the openqa-webui-daemon script which is

usually started via the systemd unit openqa-webui.service) supports

zero-downtime upgrades

with the help of

the SO_REUSEPORT socket option.

A zero-downtime restart is triggered by sending SIGHUP to the script/service

(which can be done by reloading openqa-webui.service when using systemd which

is also what the official rpm packaging does on upgrades).

The use of SO_REUSEPORT can cause unintended connection failures which can be

circumvented via sysctl net.ipv4.tcp_migrate_req=1, see

the according article on LWN.net. Note that

there is no corresponding setting for IPv6 but the setting for IPv4 seems to

help with IPv6 connections as well.

14. Run openQA workers

Workers are services running backends to perform the actual testing. The testing is commonly performed by running virtual machines but depending on the specific backend configuration different options exist.

It is possible to run openQA workers on the same machine as the web UI as well as on different machines, even in different networks, for example instances in public cloud. The only requirement is access to the web UI host over HTTP/HTTPS. For running tests based on virtual machines KVM support is recommended.

The openQA worker is distributed as a separate package which be installed on multiple machines while still using only one web UI.

If you are using SLE make sure to add the required repos first.

# openSUSE

zypper in openQA-worker

# Fedora

dnf install openqa-workerTo allow workers to access your instance, you need to log into openQA as

operator and create a pair of API key and secret. Once you are logged in, in the

top right corner, is the user menu, follow the link 'Manage API keys'. Click

the 'Create' button to generate key and secret. There is also a script

available for creating an admin user and an API key+secret pair

non-interactively, /usr/share/openqa/script/create_admin, which can be useful

for scripted deployments of openQA. Copy and paste the key and secret into

/etc/openqa/client.conf on the machine(s) where the worker is installed. Make

sure to put in a section reflecting your webserver URL. In the simplest case,

your client.conf may look like this:

[localhost]

key = 1234567890ABCDEF

secret = 1234567890ABCDEFTo start the workers you can use the provided systemd files via:

systemctl start openqa-worker@1This will start worker number one. You can start as

many workers as you need, you just need to supply a different 'instance number'

(the number after @).

You can also run workers manually from command line.

install -d -m 0755 -o _openqa-worker /var/lib/openqa/pool/X

sudo -u _openqa-worker /usr/share/openqa/script/worker --instance XThis will run a worker manually showing you debug output. If you haven’t

installed 'os-autoinst' from packages make sure to pass --isotovideo option

to point to the checkout dir where isotovideo is, not to /usr/lib! Otherwise

it will have trouble finding its perl modules.

If you start openQA workers on a different machine than the web UI host make sure to have synchronized clocks, for example using NTP, to prevent inconsistent test results.

15. Where to now?

From this point on, you can refer to the Getting Started guide to fetch the tests cases and possibly take a look at Test Developer Guide

16. Advanced configuration

16.1. Cleanup

The automated cleanup is enabled and configured by default. Cleanup tasks are

scheduled via systemd timer units and run via openqa-gru.service. The configuration

is done in

the web UI configuration file and

various places within the web UI. If you want to tweak the cleanup to your

needs, have a look at the

Cleanup of assets, results and other data

section.

16.2. Setting up git support

If your tests and needles are stored in git, openQA can perform various operations:

-

Automatically commit needles created in the web UI editor back to the repository

-

Automatically update the repository when scheduling tests

-

Update the server’s tests and needles from git repos specified in a job’s

CASEDIRandNEEDLES_DIRvariables -

Attempt to have the web UI display the correct needles each job was executed with via temporary git checkouts, based on its variables

By default, cloning based on CASEDIR and NEEDLES_DIR is enabled, but the other

features are disabled. To control these features, you can use these config settings:

[scm git]

git_auto_commit = yes|no|''

git_auto_clone = yes|no

git_auto_update = yes|no

checkout_needles_sha = yes|no-

git_auto_commitcontrols whether needles saved in the web UI editor are automatically committed. For backwards compatibility, settingscmin the[global]section to 'git' also enables this feature, ifgit_auto_commitis not set exactly to 'no' (its default value is the empty string ''). -

git_auto_updatecontrols automatic test/needle updating when scheduling tests. -

git_auto_clonecontrols the automatic cloning of repos referenced byCASEDIRandNEEDLES_DIR, at job schedule time. -

checkout_needles_shacontrols the feature whereby, when a job viewed in the web UI has variables indicating the needles came from a specific git repository and ref, openQA will attempt to clone that ref and display the needles from it.

16.2.1. Configuration of automatic needle commit feature

You may want to add some description to automatic commits coming from the web

UI.

You can do so by setting your configuration in the repository

(/var/lib/os-autoinst/needles/.git/config) to some reasonable defaults such as:

[user]

email = whatever@example.com

name = openQA web UITo enable automatic pushing of the repo as well, you need to add the following to the web UI configuration:

[scm git]

do_push = yesDepending on your setup, you might need to generate and propagate ssh keys for user 'geekotest' to be able to push.

It might also be useful to rebase first. To enable that, add the remote to get the latest updates from and the branch to rebase against to your openqa.ini:

[scm git]

update_remote = origin

update_branch = origin/masterIf rebasing, it may be useful to perform a hard reset of the local repository to ensure that the rebase will not fail. To enable that, add the following to your openqa.ini (along with the previous snippet):

[scm git]

do_cleanup = yesIf you clone the needle repository via HTTP, you can still make geekotest

able to push via SSH with a git configuration. For GitHub, it would look like

this:

git config --global url."git@github.com:".pushInsteadOf https://github.com/This way git push will automatically rewrite HTTP urls to SSH for every

repository, even if it’s already cloned.

Or put it in the ~/.gitconfig file manually:

[url "git@github.com:"]

pushInsteadOf = https://github.com/You can apply the same kind of thing for any other git hosting provider.

16.3. Referer settings to auto-mark important jobs

Automatic cleanup of old results (see GRU jobs) can sometimes render important tests useless. For example bug report with link to openQA job which no longer exists. Job can be manually marked as important to prevent quick cleanup or referer can be set so when job is accessed from particular web page (for example bugzilla), this job is automatically labeled as linked and treated as important.

List of recognized referrers is space separated list configured in the web UI configuration file:

[global]

recognized_referers = bugzilla.suse.com bugzilla.opensuse.org16.4. Worker settings

Default behavior for all workers is to use the QEMU backend and connect to

http://localhost. If you want to change some of those options, you can do so

in the worker configuration. For

example to point the workers to the FQDN of your host (needed if test cases need

to access files of the host) use the following setting:

[global]

HOST = http://openqa.example.comOnce you got workers running they should show up in the admin section of openQA in the workers section as 'idle'. When you get so far, you have your own instance of openQA up and running and all that is left is to set up some tests.

16.5. Further systemd units for the worker

The following information is partially openSUSE specific. The openQA-worker

package provides further systemd units:

-

openqa-worker-plain@.service: standard worker service, this is the default andopenqa-worker@.serviceis just a symlink to this service -

openqa-worker-no-cleanup@.service: see enabling snapshots -

openqa-worker-auto-restart@.service: worker that restarts automatically after processing assigned jobs -

openqa-worker-cacheservice/openqa-worker-cacheservice-minion: services for the asset cache -

openqa-worker.target-

Starts

openqa-worker@.service(but no other worker units) when started.-

The number of started worker slots depends on the pool directories present under

/var/lib/openqa/pool. This information is determined via a systemd generator and can be refreshed viasystemctl daemon-reload.

-

-

Stops

openqa-worker-no-cleanup@.serviceand other units conflicting withopenqa-worker@.servicewhen started. -

Stops/restarts all worker units when stopped/restarted.

-

Is restarted automatically when the

openQA-workerpackage is updated (unlessDISABLE_RESTART_ON_UPDATE="yes"is set in/etc/sysconfig/services).

-

-

openqa-reload-worker-auto-restart@.path: allows to restart the worker service automatically on configuration changes without interrupting jobs (see next section for details)

16.5.1. Stopping/restarting workers without interrupting currently running jobs

It is possible to stop a worker as soon as it becomes idle and immediately if it

is already idling by sending SIGHUP to the worker process.

When the worker is setup to be always restarted (e.g. using a systemd unit

with Restart=always like openqa-worker-auto-restart@*.service) this leads

to the worker being restarted without interrupting currently running jobs. This

can be useful to apply configuration changes and updates without interfering

ongoing testing. Example:

systemctl reload 'openqa-worker-auto-restart@*.service' # sends SIGHUP to workerThere is also the systemd unit openqa-reload-worker-auto-restart@.path which

invokes the command above (for the specified slot) whenever the worker configuration

under /etc/openqa/workers.ini changes. This unit is not enabled by default and

only affects openqa-worker-auto-restart@.service but not other worker services.

This kind of setup makes it easy to take out worker slots temporarily without interrupting currently running jobs:

# prevent worker services from restarting and being automatically reloaded

systemctl stop openqa-reload-worker-auto-restart@{1..28}.{service,path}

systemctl mask openqa-worker-auto-restart@{1..28}.service

# ensure idling worker services stop now (`--kill-who=main` ensures only the

# worker receives the signal and *not* isotovideo)

systemctl kill --kill-who=main --signal HUP openqa-worker-auto-restart@{1..28}16.6. Configuring remote workers

There are some additional requirements to get remote worker running. First is to

ensure shared storage between openQA web UI and workers.

Directory /var/lib/openqa/share contains all required data and should be

shared with read-write access across all nodes present in openQA cluster.

This step is intentionally left on system administrator to choose proper shared

storage for her specific needs.

Example of NFS configuration:

NFS server is where openQA web UI is running. Content of /etc/exports

/var/lib/openqa/share *(fsid=0,rw,no_root_squash,sync,no_subtree_check)NFS clients are where openQA workers are running. Run following command:

mount -t nfs openQA-webUI-host:/var/lib/openqa/share /var/lib/openqa/share16.7. Configuring AMQP message emission

You can configure openQA to send events (new comments, tests finished, …) to an AMQP message bus. The messages consist of a topic and a body. The body contains json encoded info about the event. See amqp_infra.md for more info about the server and the message topic format. There you will find instructions how to configure the AMQP server as well.

To let openQA send messages to an AMQP message bus,

first make sure that the perl-Mojo-RabbitMQ-Client RPM is installed.

Then you will need to configure AMQP in

the web UI configuration file:

# Enable the AMQP plugin

[global]

plugins = AMQP

# Configuration for AMQP plugin

[amqp]

heartbeat_timeout = 60

reconnect_timeout = 5

# guest/guest is the default anonymous user/pass for RabbitMQ

url = amqp://guest:guest@localhost:5672/

exchange = pubsub

topic_prefix = suseFor a TLS connection use amqps:// and port 5671.

16.8. Configuring worker to use more than one openQA server

When there are multiple openQA web interfaces (openQA instances) available a worker can be configured to register and accept jobs from all of them.

Requirements:

-

/etc/openqa/client.confmust contain API keys and secrets to all instances -

Shared storage from all instances must be properly mounted

In the worker configuration, enter space-separated instance hosts and optionally configure where the shared storage is mounted. Example:

[global]

HOST = openqa.opensuse.org openqa.fedora.fedoraproject.org

[openqa.opensuse.org]

SHARE_DIRECTORY = /var/lib/openqa/opensuse

[openqa.fedoraproject.org]

SHARE_DIRECTORY = /var/lib/openqa/fedoraConfiguring SHARE_DIRECTORY is not a hard requirement. Workers will try following

directories prior registering with openQA instance:

-

SHARE_DIRECTORY -

/var/lib/openqa/$instance_host -

/var/lib/openqa/share -

/var/lib/openqa -

fail if none of above is available

Once a worker registers to an openQA instance, scheduled jobs (of matching worker class) can be assigned to it. Dependencies between jobs will be considered for ordering the job assignment. It is possible to mix local openQA instance with remote instances or use only remote instances.

16.9. Asset and test/needle caching

If your network is slow or you experience long time to load needles you might

want to consider enabling caching on your remote workers. To enable caching,

CACHEDIRECTORY must be set in

the worker configuration. There are

also further settings one can optionally configure. Example:

[global]

HOST = http://webui

CACHEDIRECTORY = /var/lib/openqa/cache # desired cache location

CACHELIMIT = 50 # max. cache size in GiB, defaults to 50

CACHE_MIN_FREE_PERCENTAGE = 10 # min. free disk space to preserve in percent

CACHEWORKERS = 5 # number of parallel cache minion workers, defaults to 5

[http://webui]

TESTPOOLSERVER = rsync://yourlocation/tests # also cache tests (via rsync)The specified CACHEDIRECTORY must exist and must be writable by the cache

service (which usually runs as _openqa-worker user). If you install

openQA through the repositories, said directory will be created for you.

The shown configuration causes workers to download the assets from the web UI

and use them locally. The TESTPOOLSERVER setting causes also tests and

needles to be downloaded via rsync from the specified location. You can find

further examples in the comments in

the worker configuration.

It is suggested to have the cache and pool directories on the same filesystem to ensure assets used by tests are available as long as needed. This is achieved by using hard links, resorting to symlinks in other cases with the risk of assets being deleted from the cache before tests relying on these assets end.

The caching is provided by two additional services which need to be started on the worker host:

systemctl enable --now \

openqa-worker-cacheservice openqa-worker-cacheservice-minionThe rsync server daemon needs to be configured and started on the web UI host.

Example /etc/rsyncd.conf:

gid = users

read only = true

use chroot = true

transfer logging = true

log format = %h %o %f %l %b

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

slp refresh = 300

use slp = false

[tests]

path = /var/lib/openqa/share/tests

comment = openQA test distributionssystemctl enable --now rsyncd16.10. Alternative caching implementations

Caching described above works well for a single worker host, but in case of

several hosts in a single site (that is remote from the main openQA webui

instance) it results in downloading the same assets several times. In

such case, one can setup local cache on their own (without using

openqa-worker-cacheservice service) and share it with workers using

some network filesystem (see [Configuring remote workers]

section above).

Such setups can use SYNC_ASSETS_HOOK in

the web UI configuration to ensure the

cache is up to date before starting the job (or resuming test in developer

mode). The setting takes a shell command that is executed just before

evaluating assets. It is up to the system administrator to decide what it

should do, but there are few suggestions:

-

Call rsync, possibly via ssh on the cache host

-

Wait for a lock file signaling that cache download is in progress to disappear

If the command exits with code 32, re-downloading needles in developer mode will be skipped.

16.11. Enable linking files referred by job settings

Specific job settings might refer to files within the test distribution.

You can configure openQA to display links to these files within the job settings tab.

To enable particular settings to be presented as a link within the settings tab

one can setup the relevant keys in /etc/openqa/openqa.ini.

[job_settings_ui]

keys_to_render_as_links=FOO,AUTOYASTThe files referenced by the configured keys should be located either under the root

of CASEDIR or the data folder within CASEDIR.

16.12. Enable custom hook scripts on "job done" based on result

If a job is done, especially if no label could be found for carry-over, often more steps are needed for the review of the test result or providing the information to either external systems or users. As there can be very custom requirements openQA offers a point for optional configuration to let the instance administrators define specific actions.

By setting custom hooks it is possible to call external scripts defined in either environment variables or config settings.

If an environment variable corresponding to the job result is found following

the name pattern OPENQA_JOB_DONE_HOOK_$RESULT, any executable specified in

the variable as absolute path or executable name in $PATH is called with the

job ID as first and only parameter. For example for a job with result

"failed", the corresponding environment variable would be

OPENQA_JOB_DONE_HOOK_FAILED. As alternative to an environment variable a

corresponding config variable in the section [hooks] in lower-case without

the OPENQA_ prefix can be used in the format job_done_hook_$result. The

corresponding environment value has precedence. The exit code of the

externally called script is not evaluated and will have no effect.

It is also possible to specify one general hook script via job_done_hook and

enable that one for specific results via e.g. job_done_hook_enable_failed = 1.

The job setting _TRIGGER_JOB_DONE_HOOK=0 allows to disable the hook script

execution for a particular job. It is also possible to specify

_TRIGGER_JOB_DONE_HOOK=1 to execute the general hook script configured via

job_done_hook regardless of the result.

The execution time of the script is by default limited to five minutes. If the

script does not terminate after receiving SIGTERM for 30 seconds it is

terminated forcefully via SIGKILL. One can change that by setting the

environment variables OPENQA_JOB_DONE_HOOK_TIMEOUT and

OPENQA_JOB_DONE_HOOK_KILL_TIMEOUT to the desired timeouts. The format from the

timeout command is used (see timeout --help).

For example there is already an approach called "auto-review" https://github.com/os-autoinst/scripts/#auto-review---automatically-detect-known-issues-in-openqa-jobs-label-openqa-jobs-with-ticket-references-and-optionally-retrigger which offers helpful, external scripts. Config settings for openqa.opensuse.org enabling the auto-review scripts could look like:

[hooks]

job_done_hook_incomplete = /opt/openqa-scripts/openqa-label-known-issues-hook

job_done_hook_failed = /opt/openqa-scripts/openqa-label-known-issues-hookor for a host openqa.example.com:

[hooks]

job_done_hook_incomplete = env host=openqa.example.com /opt/openqa-scripts/openqa-label-known-issues-hook

job_done_hook_failed = env host=openqa.example.com /opt/openqa-scripts/openqa-label-known-issues-hookThe environment variable should be set in a systemd service override for the

GRU service. A corresponding systemd override file

/etc/systemd/system/openqa-gru.service.d/override.conf could look like this:

[Service]

Environment="OPENQA_JOB_DONE_HOOK_INCOMPLETE=/opt/os-autoinst-scripts/openqa-label-known-issues-hook"When using apparmor the called hook scripts must be covered by according

apparmor rules, for example for the above in

/etc/apparmor.d/usr.share.openqa.script.openqa:

/opt/os-autoinst-scripts/** rix,

/usr/bin/cat rix,

/usr/bin/curl rix,

/usr/bin/jq rix,

/usr/bin/mktemp rix,

/usr/share/openqa/script/client rix,Additions should be added to /etc/apparmor.d/local/usr.share.openqa.script.openqa

after which the apparmor service needs to be restarted for changes to take effect.

Note that in case of symlinks the target must be specified, and the link itself is irrelevant. So

for example Can’t exec "/bin/sh" can occur if /bin/sh is a link to a path that’s not allowed.

Apparmor denials and stderr output of the hook scripts are visible in the system logs

of the openQA GRU service, except for messages in "complain" mode which end up in audit.log.

General status and stdout output is visible in the GRU minion job dashboard on the route

/minion/jobs?offset=0&task=finalize_job_results of the openQA instance.

16.13. Automatic cloning of incomplete jobs

By default, when a worker reports an incomplete job due to a cache service related

problem, the job is automatically cloned. It is possible to extend the regex to cover

other types of incompletes as well by adjusting auto_clone_regex in the global

section of the config file. It is also possible to assign 0 to prevent the automatic

cloning.

Note that jobs marked as incomplete by the stale job detection are not affected by this configuration and cloned in any case.

16.14. Enable automatic database backup

An optional systemd service, openqa-dump-db.service, can be enabled to

perform daily database backups. This service is triggered by the

openqa-dump-db.timer. To enable automatic database backup, run:

systemctl enable --now openqa-dump-db.timerBackups are stored at /var/lib/openqa/backup.

17. Auditing - tracking openQA changes

Auditing plugin enables openQA administrators to maintain overview about what is happening with the system. Plugin records what event was triggered by whom, when and what the request looked like. Actions done by openQA workers are tracked under user whose API keys are workers using.

Audit log is directly accessible from Admin menu.

Auditing, by default enabled, can be disabled by global configuration option in the web UI configuration file:

[global]

audit_enabled = 0The audit section of

the web UI configuration allows to

exclude some events from logging using a space separated blocklist:

[audit]

blocklist = job_grab job_doneThe audit/storage_duration section of

the web UI configuration allows to set

the retention policy for different audit event types:

[audit/storage_duration]

startup = 10

jobgroup = 365

jobtemplate = 365

table = 365

iso = 60

user = 60

asset = 30

needle = 30

other = 15In this example events of the type startup would be cleaned up after 10 days, events related to

job groups after 365 days and so on. Events which do not fall into one of these categories would be

cleaned after 15 days. By default, cleanup is disabled.

Use systemctl enable --now openqa-enqueue-audit-event-cleanup.timer to schedule the cleanup

automatically every day. It is also possible to trigger the cleanup manually by invoking

/usr/share/openqa/script/openqa minion job -e limit_audit_events.

17.1. List of events tracked by the auditing plugin

-

Assets:

-

asset_register asset_delete

-

-

Workers:

-

worker_register command_enqueue

-

-

Jobs:

-

iso_create iso_delete iso_cancel

-

jobtemplate_create jobtemplate_delete

-

job_create job_grab job_delete job_update_result job_done jobs_restart job_restart job_cancel job_duplicate

-

jobgroup_create jobgroup_connect

-

-

Tables:

-

table_create table_update table_delete

-

-

Users:

-

user_update user_login user_deleted

-

-

Comments:

-

comment_create comment_update comment_delete

-

-

Needles:

-

needle_delete needle_modify

-

Some of these events are very common and may clutter audit database. For this reason job_grab and job_done

events are on the blocklist by default.

18. Automatic system upgrades and reboots of openQA hosts

The distribution package openQA-auto-update offers automatic system

upgrades and reboots of openQA hosts. To use that feature install the package

openQA-auto-update and enable the corresponding systemd timer:

systemctl enable openqa-auto-update.timerThis triggers a nightly system upgrade which first looks into configured openQA

repositories for stable packages, then conducts the upgrade and schedules

reboots during the configured reboot maintenance windows using rebootmgr.

As an alternative to the systemd timer the script

/usr/share/openqa/script/openqa-auto-update can be called when desired. The

script also supports cache cleanup preserving a certain number of versions per

package. Check its helptext for details.

The distribution package openQA-continuous-update can be used to continuously

upgrade the system. It will frequently check whether devel:openQA contains

updates and if it does it will upgrade the whole system. This approach is

independent of openQA-auto-update but can be used complementary. The

configuration is analogous to openQA-auto-update.

19. Migrating from older databases

For older versions of openQA, you can migrate from SQLite to PostgreSQL according to DB migration from SQLite to PostgreSQL.

For migrating from older PostgreSQL versions read on.

20. Migrating PostgreSQL database on openSUSE

The PostgreSQL data-directory needs to be migrated in order to switch to a

newer major version of PostgreSQL. The following instructions are specific to

openSUSE’s PostgreSQL and openQA packaging but with a little adaption they can

likely be used for other setups as well. These instructions can migrate big

databases in seconds without requiring additional disk space. However, services

need to be stopped during the (short) migration.

-

Locate the

data-directory. Its path is configured in/etc/sysconfig/postgresqland should be/var/lib/pgsql/databy default. The paths in the next steps assume the default. -

To ease migrations, it is recommended making the

data-directory a symlink to a versioned directory. So the file system layout would look for example like this:$ sudo -u postgres ls -l /var/lib/pgsql | grep data lrwxrwxrwx 1 root root 7 8. Sep 2019 data -> data.10 drwx------ 20 postgres postgres 4096 30. Aug 00:00 data.10 drwx------ 20 postgres postgres 4096 8. Sep 2019 data.96The next steps assume such a layout.

-

Install same set of postgresql* packages as are installed for the old version:

oldver=10 newver=12 sudo zypper in postgresql$newver-server postgresql$newver-contrib -

Change to a directory where the user postgres will be able to write logs to, e.g.:

cd /tmp -

Prepare the migration:

sudo -u postgres /usr/lib/postgresql$newver/bin/initdb [locale-settings] -D /var/lib/pgsql/data.$newverImportantBe sure to use initdb from the target version (like it is done here) and also no newer version which is possibly installed on the system as well. ImportantLookup the locale settings in /var/lib/pgsql/data.$oldver/postgresql.confor viasudo -u geekotest psql openqa -c 'show all;' | grep lc_to pass locale settings listed byinitdb --helpas appropriate. On some machines additional settings need to be supplied, e.g. from an older database version on openqa.opensuse.org it was necessary to pass the following settings:--encoding=UTF8 --locale=en_US.UTF-8 --lc-collate=C --lc-ctype=en_US.UTF-8 --lc-messages=C --lc-monetary=C --lc-numeric=C --lc-time=C -

Take over any relevant changes from the old config to the new one, e.g.:

sudo -u postgres vimdiff \ /var/lib/pgsql/data.$oldver/postgresql.conf \ /var/lib/pgsql/data.$newver/postgresql.confImportantThere shouldn’t be a diff in the locale settings, otherwise pg_upgradewill complain. -

Shutdown postgres server and related services as appropriate for your setup, e.g.:

sudo systemctl stop openqa-{webui,websockets,scheduler,livehandler,gru} sudo systemctl stop postgresql -

Perform the migration:

sudo -u postgres /usr/lib/postgresql$newver/bin/pg_upgrade --link \ --old-bindir=/usr/lib/postgresql$oldver/bin \ --new-bindir=/usr/lib/postgresql$newver/bin \ --old-datadir=/var/lib/pgsql/data.$oldver \ --new-datadir=/var/lib/pgsql/data.$newverImportantBe sure to use pg_upgrade from the target version (like it is done here) and also no newer version which is possibly installed on the system as well. Checkout the PostgreSQL documentation for details. NoteThis step only takes a few seconds for multiple production DBs because the --linkoption is used. -

Change symlink (shown in step 2) to use the new data directory:

sudo ln --force --no-dereference --relative --symbolic /var/lib/pgsql/data.$newver /var/lib/pgsql/data -

Start services again as appropriate for your setup, e.g.:

sudo systemctl start postgresql sudo systemctl start openqa-{webui,websockets,scheduler,livehandler,gru}NoteThere is no need to take care of starting the new version of the PostgreSQL service. The start script checks the version of the data directory and starts the correct version. -

Check whether usual role and database are present and running on the new version:

sudo -u geekotest psql -c 'select version();' openqa -

Remove old postgres packages if not needed anymore:

sudo zypper rm postgresql$oldver-server postgresql$oldver-contrib postgresql$oldver -

Delete the old data directory if not needed anymore:

sudo -u postgres rm -r /var/lib/pgsql/data.$oldver

21. Working on database-related performance problems

Without extra setup, PostgreSQL already gathers many statistics, checkout the official documentation.

21.1. Enable further statistics

These statistics help to identify the most time-consuming queries.

-

Configure the PostgreSQL extension

pg_stat_statements, see example on the official documentation. -

Ensure the extension library is installed which might be provided by a separate package (e.g.

postgresql14-contribfor PostgreSQL 14 on openSUSE). -

Restart PostgreSQL.

-

Enable the extension via

CREATE EXTENSION pg_stat_statements.

21.1.1. Make use of these statistics

Simply query the table pg_stat_statements. Use \x in psql for extended

mode or substring() on the query parameter for readable output. The columns

are explained in the previously mentioned documentation. Here an example to show

similar queries which are most time-consuming:

SELECT

substring(query from 0 for 250) as query_start, sum(calls) as calls, max(max_exec_time) as max_exec_time,

sum(total_exec_time) as total_exec_time, sum(rows) as rows

FROM pg_stat_statements group by query_start ORDER BY total_exec_time DESC LIMIT 10;After significant schema changes consider resetting query statistics (SELECT

pg_stat_statement_reset()) and checking the query plans (EXPLAIN (ANALYZE,

BUFFERS) …) for the slowest queries showing up afterwards to make sure they

are using indexes (and not just sequential scans).

21.2. Further things to try

-

Try to tweak database configuration parameters. For example increasing

work_meminpostgresql.confmight help with some heavy queries. -

Run

VACUUM VERBOSE ANALYZE table_name;for any table that shows to be impacting the performance. This can take some seconds or minutes but can help to improve performance in particular after bigger schema migrations for example type changes.

21.3. Further resources

-

Checkout the official documentation for more details about

EXPLAIN. There is also service for formatting those explanations to be more readable. -

Checkout the official documentation for more details about

VACUUM ANALYZE. -

Checkout the following documentation pages.

22. Filesystem layout

Tests, needles, assets, results and working directories (a.k.a. "pool directories") are located in certain

subdirectories within /var/lib/openqa. This directory is configurable (see

Customize base directory). Here we assume the default is in place.

Note that the sub directories within /var/lib/openqa must be accessible by the user that runs the openQA web UI

(by default 'geekotest') or by the user that runs the worker/isotovideo (by default '_openqa-worker').

These are the most important sub directories within /var/lib/openqa:

-

dbcontains the web UI’s database lockfile -

imagesis where the web UI stores test screenshots and thumbnails -

testresultsis where the web UI stores test logs and test-generated assets -

webuiis where the web UI stores miscellaneous files -

poolcontains working directories of the workers/isotovideo -

sharecontains directories shared between the web UI and (remote) workers, can be owned by root -

share/factorycontains test assets and temp directory, can be owned by root but sysadmin must create subdirs -

share/factory/isoandshare/factory/iso/fixedcontain ISOs for tests -

share/factory/hddandshare/factory/hdd/fixedcontain hard disk images for tests -

share/factory/repoandshare/factory/repo/fixedcontain repositories for tests -

share/factory/otherandshare/factory/other/fixedcontain miscellaneous test assets (e.g. kernels and initrds) -

share/factory/tmpis used as a temporary directory (openQA will create it if it ownsshare/factory) -

share/testscontains the tests themselves